Saving, loading and logging¶

In this section, you will find the information you need to log data with TensorBoard or Weights & Biases and to save and load checkpoints and memories to and from persistent storage.

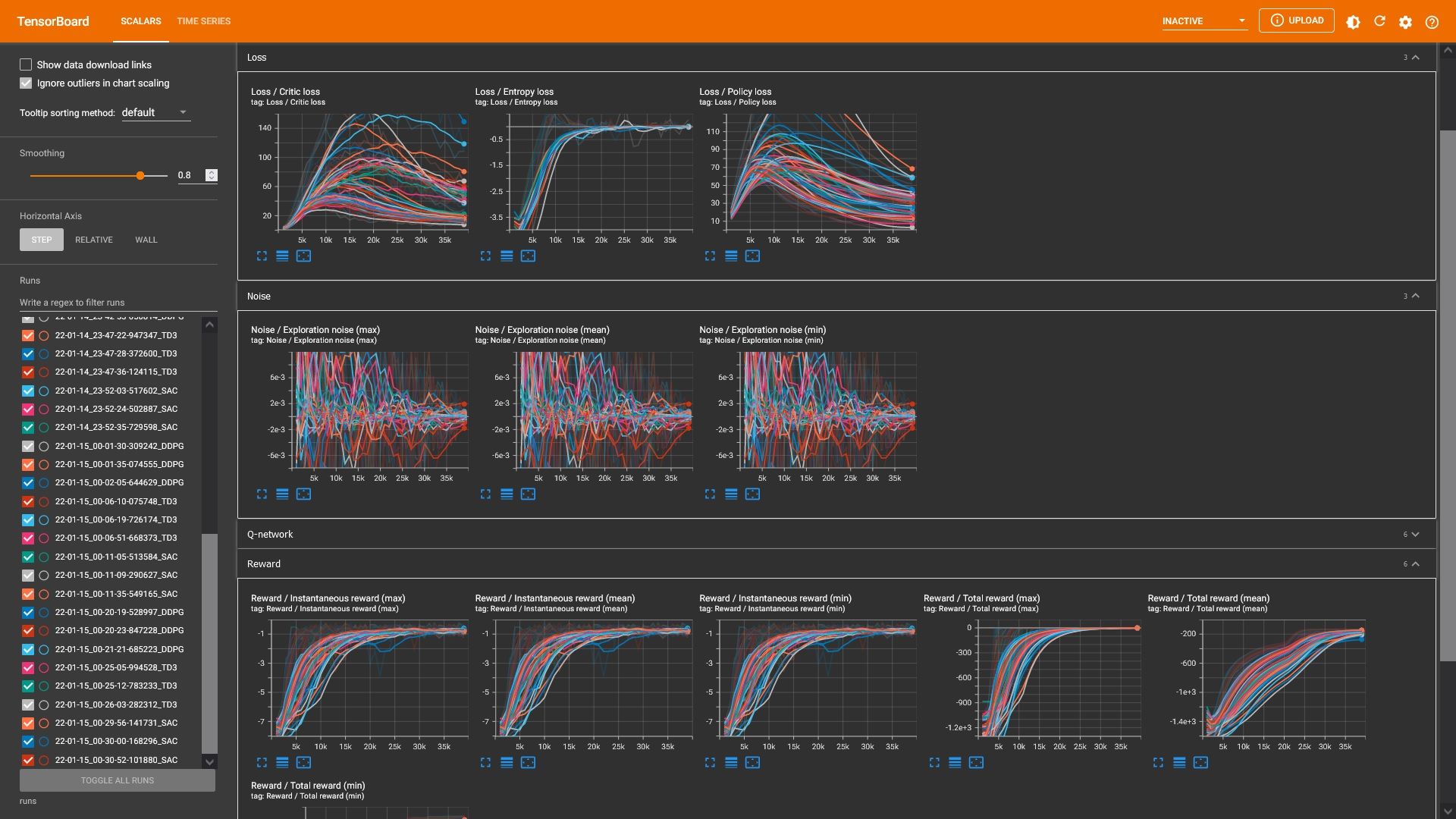

TensorBoard integration¶

TensorBoard is used for tracking and visualizing metrics and scalars (coefficients, losses, etc.). The tracking and writing of metrics and scalars is the responsibility of the agents (can be customized independently for each agent using its configuration dictionary).

Note

A standalone JAX installation does not include any package for writing events to TensorBoard. In this case, one of the following frameworks/packages must be installed (if it is not already):

Configuration¶

Each agent offers the following parameters under the "experiment" key:

    DEFAULT_CONFIG = {
        # ...
        "experiment": {
            "directory": "",             # experiment's parent directory
            "experiment_name": "",       # experiment name
            "write_interval": 250,       # TensorBoard writing interval (timesteps)
            "checkpoint_interval": 1000, # interval for checkpoints (timesteps)
            "store_separately": False,   # whether to store checkpoints separately
            "wandb": False,              # whether to use Weights & Biases
            "wandb_kwargs": {}           # wandb kwargs (see https://docs.wandb.ai/ref/python/init)
        }
    }

directory: directory path where the data generated by the experiments (a subdirectory) are stored. If no value is set, the runs folder (inside the current working directory) will be used (and created if it does not exist).

experiment_name: name of the experiment (subdirectory). If no value is set, it will be the current date and time and the agent’s name (e.g. 22-01-09_22-48-49-816281_DDPG).

write_interval: interval for writing metrics and values to TensorBoard (default is 250 timesteps). A value equal to or less than 0 disables tracking and writing to TensorBoard.
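As a rough illustration of the fallback rules above, the following sketch resolves an experiment directory in plain Python. The helpers `resolve_experiment_dir` and `tracking_enabled` are hypothetical names introduced here (they are not part of the library), and the timestamp format is inferred from the example name shown above.

```python
import os
from datetime import datetime
from typing import Optional


def resolve_experiment_dir(experiment_cfg: dict, agent_name: str, now: Optional[datetime] = None) -> str:
    """Hypothetical helper: apply the documented fallbacks for "directory" and "experiment_name"."""
    now = now or datetime.now()
    # An empty "directory" falls back to the "runs" folder inside the current working directory
    parent = experiment_cfg.get("directory") or os.path.join(os.getcwd(), "runs")
    # An empty "experiment_name" falls back to "<date>_<time>_<agent name>"
    timestamp = now.strftime("%y-%m-%d_%H-%M-%S-%f")
    name = experiment_cfg.get("experiment_name") or f"{timestamp}_{agent_name}"
    return os.path.join(parent, name)


def tracking_enabled(experiment_cfg: dict) -> bool:
    """Hypothetical helper: a write interval of 0 or less disables TensorBoard tracking."""
    return experiment_cfg.get("write_interval", 250) > 0


cfg = {"directory": "", "experiment_name": "", "write_interval": 250}
path = resolve_experiment_dir(cfg, "DDPG", now=datetime(2022, 1, 9, 22, 48, 49, 816281))
print(os.path.basename(path))
```

Passing a fixed `now` keeps the example reproducible; in normal use the current date and time would be taken.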

Tracked metrics/scalars visualization¶

To visualize the tracked metrics/scalars during or after training, launch TensorBoard with the following command in a terminal:

tensorboard --logdir=PATH_TO_RUNS_DIRECTORY

The following table shows the metrics/scalars tracked by each agent ([+] always, [–] only when the corresponding feature is enabled in the agent’s configuration):

Tag | Metric / Scalar | A2C | AMP | CEM | DDPG | DDQN | DQN | PPO | Q-learning | SAC | SARSA | TD3 | TRPO |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
Coefficient | Entropy coefficient | + | |||||||||||
Return threshold | + | ||||||||||||
Mean disc. returns | + | ||||||||||||
Episode | Total timesteps | + | + | + | + | + | + | + | + | + | + | + | + |
Exploration | Exploration noise | + | + | ||||||||||
Exploration epsilon | + | + | |||||||||||
Learning | Learning rate | + | + | – | – | – | – | ||||||
Policy learning rate | – | – | – | ||||||||||
Critic learning rate | – | – | – | ||||||||||
Return threshold | – | ||||||||||||
Loss | Critic loss | + | + | + | |||||||||
Entropy loss | – | – | – | – | |||||||||
Discriminator loss | + | ||||||||||||
Policy loss | + | + | + | + | + | + | + | + | |||||
Q-network loss | + | + | |||||||||||
Value loss | + | + | + | + | |||||||||
Policy | Standard deviation | + | + | + | + | ||||||||
Q-network | Q1 | + | + | + | |||||||||
Q2 | + | + | |||||||||||
Reward | Instantaneous reward | + | + | + | + | + | + | + | + | + | + | + | + |
Total reward | + | + | + | + | + | + | + | + | + | + | + | + |
Target | Target | + | + | + | + | + |

Tracking custom metrics/scalars¶

Tracking custom data attached to the agent’s control and timing logic (recommended)

Although TensorBoard’s writing control and timing logic is handled by the base class Agent, it is possible to track custom data. The track_data method can be used (see the Agent class for more details), passing as arguments the data identification (tag) and the scalar value to be recorded.

For example, to track the current CPU usage, the following code can be used:

    import psutil

    # assuming agent is an instance of an Agent subclass
    agent.track_data("Resource / CPU usage", psutil.cpu_percent())
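To make the idea of "control and timing logic" more concrete, here is a minimal, self-contained sketch of interval-gated tracking. The `IntervalTracker` class and its `maybe_write` method are hypothetical stand-ins, not the library's Agent class, and averaging the values collected between writes is an assumption made for this illustration.

```python
from collections import defaultdict
from statistics import mean


class IntervalTracker:
    """Toy stand-in for interval-gated tracking (hypothetical, not the library's Agent class)."""

    def __init__(self, write_interval: int):
        self.write_interval = write_interval
        self.pending = defaultdict(list)  # tag -> values tracked since the last write
        self.written = []                 # (tag, value, timestep) tuples handed to the "writer"

    def track_data(self, tag: str, value: float) -> None:
        # Only buffer the value; writing is decided later by the timing logic
        self.pending[tag].append(value)

    def maybe_write(self, timestep: int) -> None:
        # A write interval of 0 or less disables writing altogether
        if self.write_interval <= 0:
            return
        if timestep > 0 and timestep % self.write_interval == 0:
            for tag, values in self.pending.items():
                # Assumption: buffered values are averaged before being written
                self.written.append((tag, mean(values), timestep))
            self.pending.clear()


tracker = IntervalTracker(write_interval=2)
for timestep, cpu in enumerate([10.0, 20.0, 30.0, 40.0, 50.0]):
    tracker.track_data("Resource / CPU usage", cpu)
    tracker.maybe_write(timestep)
print(tracker.written)
```

The point of the sketch is that tracked values are only buffered when recorded; whether and when they reach TensorBoard depends on the write interval.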

Tracking custom data directly to TensorBoard

It is also possible to access the SummaryWriter instance directly through the writer property in order to write straight to TensorBoard, bypassing the base class’s control and timing logic.

For example, to write directly to TensorBoard:

    import psutil

    # assuming agent is an instance of an Agent subclass
    agent.writer.add_scalar("Resource / CPU usage", psutil.cpu_percent(), global_step=1000)

Weights & Biases integration¶

Weights & Biases (wandb) is also supported for tracking and visualizing metrics and scalars. Its configuration is the responsibility of the agents (it can be customized independently for each agent using its configuration dictionary).

Follow the steps described in the Weights & Biases documentation (Set up wandb) to log in to the wandb library on the current machine.

Note

The wandb library is not installed by default. Install it in a Python 3 environment using pip as follows:

pip install wandb

Configuration¶

Each agent offers the following parameters under the "experiment" key. Visit the Weights & Biases documentation for more details about the configuration parameters.

    DEFAULT_CONFIG = {
        # ...
        "experiment": {
            "directory": "",             # experiment's parent directory
            "experiment_name": "",       # experiment name
            "write_interval": 250,       # TensorBoard writing interval (timesteps)
            "checkpoint_interval": 1000, # interval for checkpoints (timesteps)
            "store_separately": False,   # whether to store checkpoints separately
            "wandb": False,              # whether to use Weights & Biases
            "wandb_kwargs": {}           # wandb kwargs (see https://docs.wandb.ai/ref/python/init)
        }
    }

wandb: whether to enable support for Weights & Biases.

wandb_kwargs: keyword argument dictionary used to parameterize the wandb.init function. If no values are provided for the following parameters, the following values will be set for them:

"name": will be set to the name of the experiment directory.

"sync_tensorboard": will be set to True.

"config": will be updated with the configuration dictionaries of both the agent (and its models) and the trainer. The update will be done even if a value has been set for the parameter.

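The defaulting rules for wandb_kwargs can be sketched in plain Python as follows. The function `build_wandb_kwargs` is a hypothetical helper written for this illustration, and the "agent" and "trainer" keys used inside "config" are assumptions; the library may organize the merged configuration differently.

```python
import copy


def build_wandb_kwargs(user_kwargs: dict, experiment_dir_name: str, agent_cfg: dict, trainer_cfg: dict) -> dict:
    """Hypothetical helper: fill in defaults for the kwargs that would be passed to wandb.init."""
    kwargs = copy.deepcopy(user_kwargs)  # do not mutate the caller's dictionary
    # "name" and "sync_tensorboard" are only set when the user did not provide them
    kwargs.setdefault("name", experiment_dir_name)
    kwargs.setdefault("sync_tensorboard", True)
    # "config" is always updated, even when the user already supplied a value
    kwargs.setdefault("config", {})
    kwargs["config"].update({"agent": agent_cfg, "trainer": trainer_cfg})  # key names are assumptions
    return kwargs


user_kwargs = {"project": "demo", "config": {"seed": 42}}
kwargs = build_wandb_kwargs(
    user_kwargs,
    experiment_dir_name="22-01-09_22-48-49-816281_DDPG",
    agent_cfg={"write_interval": 250},
    trainer_cfg={"timesteps": 10000},
)
print(sorted(kwargs))
```

In this sketch a user-provided "name" is preserved, while user-provided "config" entries are kept and extended rather than replaced.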
Checkpoints¶

Saving checkpoints¶

The checkpoints are saved in the checkpoints subdirectory of the experiment’s directory (its path can be customized using the options described in the previous subsection). The checkpoint name is the key referring to the agent (or models, optimizers and preprocessors) and the current timestep (e.g. runs/22-01-09_22-48-49-816281_DDPG/checkpoints/agent_2500.pt).

The checkpoint management, as in the previous case, is the responsibility of the agents (can be customized independently for each agent using its configuration dictionary).

    DEFAULT_CONFIG = {
        # ...
        "experiment": {
            "directory": "",             # experiment's parent directory
            "experiment_name": "",       # experiment name
            "write_interval": 250,       # TensorBoard writing interval (timesteps)
            "checkpoint_interval": 1000, # interval for checkpoints (timesteps)
            "store_separately": False,   # whether to store checkpoints separately
            "wandb": False,              # whether to use Weights & Biases
            "wandb_kwargs": {}           # wandb kwargs (see https://docs.wandb.ai/ref/python/init)
        }
    }

checkpoint_interval: interval for checkpoints (default is 1000 timesteps). A value equal to or less than 0 disables the checkpoint creation.

store_separately: if set to True, all the modules that an agent contains (models, optimizers, preprocessors, etc.) will each be saved in a separate file. By default (False) the modules are grouped in a dictionary and stored in the same file.
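The interaction between checkpoint_interval and store_separately can be pictured with the sketch below, which only computes the file paths that would be written at a given timestep. The function `checkpoint_paths` is hypothetical, and the per-module file names follow the "key and timestep" pattern described above as an assumption.

```python
import os


def checkpoint_paths(experiment_dir, timestep, module_names,
                     checkpoint_interval=1000, store_separately=False, extension="pt"):
    """Hypothetical helper: list the checkpoint files that would be written at this timestep."""
    # An interval of 0 or less disables checkpoint creation
    if checkpoint_interval <= 0 or timestep <= 0 or timestep % checkpoint_interval != 0:
        return []
    folder = os.path.join(experiment_dir, "checkpoints")
    if store_separately:
        # One file per module (models, optimizers, preprocessors, ...)
        return [os.path.join(folder, f"{name}_{timestep}.{extension}") for name in module_names]
    # Default: all modules grouped in one dictionary and stored in a single file
    return [os.path.join(folder, f"agent_{timestep}.{extension}")]


modules = ["policy", "value", "optimizer"]
grouped = checkpoint_paths("runs/exp", 2000, modules)
separate = checkpoint_paths("runs/exp", 2000, modules, store_separately=True)
print(grouped)
print(separate)
```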

Checkpointing the best models

The best models, according to the mean total reward, will be saved in the checkpoints subdirectory of the experiment’s directory. The checkpoint name is the word best and the key referring to the model (e.g. runs/22-01-09_22-48-49-816281_DDPG/checkpoints/best_agent.pt).

The best models are updated internally on each TensorBoard writing interval "write_interval" and they are saved on each checkpoint interval "checkpoint_interval". The "store_separately" key specifies whether the best modules are grouped and stored together or separately.
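The two-interval behavior described above (update the best candidate at each write interval, persist it at each checkpoint interval) might look roughly like the following. `BestCheckpointTracker` is a hypothetical class for illustration only; skipping the save when the best candidate has not changed since the last save is an assumption of this sketch.

```python
import copy


class BestCheckpointTracker:
    """Hypothetical sketch of best-model bookkeeping driven by two intervals."""

    def __init__(self, write_interval: int, checkpoint_interval: int):
        self.write_interval = write_interval
        self.checkpoint_interval = checkpoint_interval
        self.best_reward = float("-inf")
        self.best_state = None
        self.changed = False  # whether the best candidate changed since the last save
        self.saved = []       # (timestep, reward) pairs standing in for files written to disk

    def step(self, timestep: int, mean_total_reward: float, state: dict) -> None:
        # The in-memory best candidate is refreshed on each write interval
        if self.write_interval > 0 and timestep % self.write_interval == 0:
            if mean_total_reward > self.best_reward:
                self.best_reward = mean_total_reward
                self.best_state = copy.deepcopy(state)
                self.changed = True
        # The candidate is persisted on each checkpoint interval (assumption: only if it changed)
        if self.checkpoint_interval > 0 and timestep % self.checkpoint_interval == 0 and self.changed:
            self.saved.append((timestep, self.best_reward))
            self.changed = False


tracker = BestCheckpointTracker(write_interval=2, checkpoint_interval=4)
rewards = [1, 5, 9, 3, 7, 8, 2, 4]  # mean total reward at timesteps 1..8
for timestep, reward in enumerate(rewards, start=1):
    tracker.step(timestep, reward, state={"weights": reward})
print(tracker.saved)
```

Note that the reward of 9 at timestep 3 is never considered in this sketch, because timestep 3 does not fall on a write interval.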

Loading checkpoints¶

Checkpoints can be loaded (e.g. to resume or continue training) for each of the instantiated agents (or models) independently via the .load(...) method (Agent.load or Model.load). It accepts the path (relative or absolute) of the checkpoint to load as its only argument. The checkpoint will be dynamically mapped to the device specified as an argument in the class constructor (internally, the map_location parameter of torch.load is used during loading).

Note

The agent or model instances must have the same architecture/structure as the ones used to save the checkpoint. The current implementation loads the model’s state-dict directly.

Note

Warnings such as [skrl:WARNING] Cannot load the <module> module. The agent doesn't have such an instance can be ignored without problems during evaluation. The reason for this is that during the evaluation not all components, such as optimizers or other models apart from the policy, may be defined.
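The situation behind this warning can be sketched without any deep learning framework. In the snippet below, plain dictionaries stand in for modules and their state, and `load_modules` is a hypothetical function; it only illustrates why a checkpoint entry without a matching instance is skipped with a warning rather than treated as an error.

```python
import warnings


def load_modules(checkpoint: dict, modules: dict) -> list:
    """Hypothetical sketch: restore every checkpoint entry that has a matching module instance."""
    loaded = []
    for name, state in checkpoint.items():
        module = modules.get(name)
        if module is None:
            # e.g. optimizers or critics are often not defined during evaluation
            warnings.warn(f"Cannot load the {name} module. The agent doesn't have such an instance")
            continue
        module.update(state)  # stand-in for loading a state-dict
        loaded.append(name)
    return loaded


checkpoint = {"policy": {"w": 1.0}, "value": {"w": 2.0}, "optimizer": {"lr": 1e-3}}
evaluation_modules = {"policy": {}}  # only the policy is defined during evaluation

with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    loaded = load_modules(checkpoint, evaluation_modules)

print(loaded, len(caught))
```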

The following code snippets show how to load the checkpoints through the instantiated agent (recommended) or models. See the Examples section for showcases of how to load checkpoints and use them to continue training or evaluate experiments.

    from skrl.agents.torch.ppo import PPO

    # Instantiate the agent
    agent = PPO(models=models,  # models dict
                memory=memory,  # memory instance, or None if not required
                cfg=agent_cfg,  # configuration dict (preprocessors, learning rate schedulers, etc.)
                observation_space=env.observation_space,
                action_space=env.action_space,
                device=env.device)

    # Load the checkpoint
    agent.load("./runs/22-09-29_22-48-49-816281_DDPG/checkpoints/agent_1200.pt")

    from skrl.agents.jax.ppo import PPO

    # Instantiate the agent
    agent = PPO(models=models,  # models dict
                memory=memory,  # memory instance, or None if not required
                cfg=agent_cfg,  # configuration dict (preprocessors, learning rate schedulers, etc.)
                observation_space=env.observation_space,
                action_space=env.action_space,
                device=env.device)

    # Load the checkpoint
    agent.load("./runs/22-09-29_22-48-49-816281_DDPG/checkpoints/agent_1200.pickle")

    import torch.nn as nn

    from skrl.models.torch import Model, DeterministicMixin

    # Define the model
    class Policy(DeterministicMixin, Model):
        def __init__(self, observation_space, action_space, device, clip_actions=False):
            Model.__init__(self, observation_space, action_space, device)
            DeterministicMixin.__init__(self, clip_actions)

            self.net = nn.Sequential(nn.Linear(self.num_observations, 32),
                                     nn.ReLU(),
                                     nn.Linear(32, 32),
                                     nn.ReLU(),
                                     nn.Linear(32, self.num_actions))

        def compute(self, inputs, role):
            return self.net(inputs["states"]), {}

    # Instantiate the model
    policy = Policy(env.observation_space, env.action_space, env.device, clip_actions=True)

    # Load the checkpoint
    policy.load("./runs/22-09-29_22-48-49-816281_DDPG/checkpoints/2500_policy.pt")

    import flax.linen as nn

    from skrl.models.jax import Model, DeterministicMixin

    # Define the model
    class Policy(DeterministicMixin, Model):
        def __init__(self, observation_space, action_space, device=None, clip_actions=False, **kwargs):
            Model.__init__(self, observation_space, action_space, device, **kwargs)
            DeterministicMixin.__init__(self, clip_actions)

        @nn.compact  # marks the given module method allowing inlined submodules
        def __call__(self, inputs, role):
            x = nn.Dense(32)(inputs["states"])
            x = nn.relu(x)
            x = nn.Dense(32)(x)
            x = nn.relu(x)
            x = nn.Dense(self.num_actions)(x)
            return x, {}

    # Instantiate the model
    policy = Policy(env.observation_space, env.action_space, env.device, clip_actions=True)

    # Load the checkpoint
    policy.load("./runs/22-09-29_22-48-49-816281_DDPG/checkpoints/2500_policy.pickle")

In addition, it is possible to load, through the library utilities, trained agent checkpoints from the Hugging Face Hub (huggingface.co/skrl). See the Hugging Face integration for more information.

    from skrl.agents.torch.ppo import PPO
    from skrl.utils.huggingface import download_model_from_huggingface

    # Instantiate the agent
    agent = PPO(models=models,  # models dict
                memory=memory,  # memory instance, or None if not required
                cfg=agent_cfg,  # configuration dict (preprocessors, learning rate schedulers, etc.)
                observation_space=env.observation_space,
                action_space=env.action_space,
                device=env.device)

    # Load the checkpoint from Hugging Face Hub
    path = download_model_from_huggingface("skrl/OmniIsaacGymEnvs-Cartpole-PPO", filename="agent.pt")
    agent.load(path)

    from skrl.agents.jax.ppo import PPO
    from skrl.utils.huggingface import download_model_from_huggingface

    # Instantiate the agent
    agent = PPO(models=models,  # models dict
                memory=memory,  # memory instance, or None if not required
                cfg=agent_cfg,  # configuration dict (preprocessors, learning rate schedulers, etc.)
                observation_space=env.observation_space,
                action_space=env.action_space,
                device=env.device)

    # Load the checkpoint from Hugging Face Hub
    path = download_model_from_huggingface("skrl/OmniIsaacGymEnvs-Cartpole-PPO", filename="agent.pickle")
    agent.load(path)

Migrating external checkpoints¶

It is possible to load checkpoints generated with external reinforcement learning libraries into skrl agents (or models) via the .migrate(...) method (Agent.migrate or Model.migrate).

Note

In some cases it will be necessary to specify a parameter mapping, especially in ambiguous models (where 2 or more parameters, for source or current model, have equal shape). Refer to the respective method documentation for more details in these cases.
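To see why equal shapes make a migration ambiguous, consider the following framework-free sketch. It matches parameters between two models purely by shape and reports the ones it cannot resolve on its own. The function `match_by_shape`, the parameter names, and the direction of the `name_map` argument (current name to external name) are all assumptions made for this illustration; refer to the method documentation for the actual mapping format.

```python
from collections import defaultdict


def match_by_shape(source_shapes: dict, target_shapes: dict, name_map=None):
    """Hypothetical sketch: pair target parameters with source parameters of identical shape."""
    name_map = name_map or {}
    by_shape = defaultdict(list)
    for name, shape in source_shapes.items():
        by_shape[shape].append(name)

    mapping, unresolved = {}, []
    for target, shape in target_shapes.items():
        if target in name_map:
            # An explicit mapping always wins over shape-based guessing
            mapping[target] = name_map[target]
            continue
        candidates = by_shape.get(shape, [])
        if len(candidates) == 1:
            mapping[target] = candidates[0]
        else:
            # Zero or several candidates: the shape alone cannot decide
            unresolved.append(target)
    return mapping, unresolved


source = {"mlp.0.weight": (32, 4), "mlp.2.weight": (32, 32), "mlp.4.weight": (32, 32), "mu.weight": (1, 32)}
target = {"net.0.weight": (32, 4), "net.2.weight": (32, 32), "net.4.weight": (1, 32)}

mapping, unresolved = match_by_shape(source, target)
print(unresolved)

mapping, unresolved_after = match_by_shape(source, target, name_map={"net.2.weight": "mlp.2.weight"})
print(unresolved_after)
```

Here two source parameters share the shape (32, 32), so the corresponding target parameter stays unresolved until an explicit mapping is supplied.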

The following code snippets show how to migrate checkpoints from other libraries to the agents or models implemented in skrl:

    from skrl.agents.torch.ppo import PPO

    # Instantiate the agent
    agent = PPO(models=models,  # models dict
                memory=memory,  # memory instance, or None if not required
                cfg=agent_cfg,  # configuration dict (preprocessors, learning rate schedulers, etc.)
                observation_space=env.observation_space,
                action_space=env.action_space,
                device=env.device)

    # Migrate a rl_games checkpoint
    agent.migrate(path="./runs/Cartpole/nn/Cartpole.pth")

    import torch
    import torch.nn as nn

    from skrl.models.torch import Model, DeterministicMixin

    # Define the model
    class Policy(DeterministicMixin, Model):
        def __init__(self, observation_space, action_space, device, clip_actions=False):
            Model.__init__(self, observation_space, action_space, device)
            DeterministicMixin.__init__(self, clip_actions)

            self.net = nn.Sequential(nn.Linear(self.num_observations, 32),
                                     nn.ReLU(),
                                     nn.Linear(32, 32),
                                     nn.ReLU(),
                                     nn.Linear(32, self.num_actions))

        def compute(self, inputs, role):
            return self.net(inputs["states"]), {}

    # Instantiate the model
    policy = Policy(env.observation_space, env.action_space, env.device, clip_actions=True)

    # Migrate a rl_games checkpoint (only the model)
    policy.migrate(path="./runs/Cartpole/nn/Cartpole.pth")

    # or migrate a stable-baselines3 checkpoint
    policy.migrate(path="./ddpg_pendulum.zip")

    # or migrate a checkpoint of any other library
    state_dict = torch.load("./external_model.pt")
    policy.migrate(state_dict=state_dict)

Memory export/import¶

Exporting memories¶

Memories can be automatically exported to files at each filling cycle (before data overwriting is performed). Whether export is enabled, the format of the output files, and their path can be set through the constructor parameters when an instance is created.

    from skrl.memories.torch import RandomMemory

    # Instantiate a memory and enable its export
    memory = RandomMemory(memory_size=16,
                          num_envs=env.num_envs,
                          device=device,
                          export=True,
                          export_format="pt",
                          export_directory="./memories")

    from skrl.memories.jax import RandomMemory

    # Instantiate a memory and enable its export
    memory = RandomMemory(memory_size=16,
                          num_envs=env.num_envs,
                          device=device,
                          export=True,
                          export_format="np",
                          export_directory="./memories")

export: enable or disable the memory export (default is disabled).

export_format: the format of the exported memory (default is "pt"). Supported formats are PyTorch ("pt"), NumPy ("np") and comma-separated values ("csv").

export_directory: the directory where the memory will be exported (default is "memory").

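The phrase "at each filling cycle (before data overwriting is performed)" can be illustrated with a small circular buffer that writes its contents to disk whenever it becomes full. `ExportingBuffer` is a hypothetical class that uses only the standard library and the CSV format; the file naming scheme is an assumption and does not reflect the library's actual output names.

```python
import csv
import os
import tempfile


class ExportingBuffer:
    """Hypothetical circular buffer that exports its content each time it fills up."""

    def __init__(self, memory_size: int, export: bool = False, export_directory: str = "memory"):
        self.memory_size = memory_size
        self.export = export
        self.export_directory = export_directory
        self.rows = [None] * memory_size
        self.index = 0
        self.exported_files = []

    def add_sample(self, state, reward) -> None:
        self.rows[self.index] = (state, reward)
        self.index += 1
        if self.index == self.memory_size:
            # The buffer is full: export now, before the next sample overwrites old data
            if self.export:
                self._export_csv()
            self.index = 0

    def _export_csv(self) -> None:
        os.makedirs(self.export_directory, exist_ok=True)
        # File naming is an assumption made for this sketch
        path = os.path.join(self.export_directory, f"memory_{len(self.exported_files)}.csv")
        with open(path, "w", newline="") as f:
            writer = csv.writer(f)
            writer.writerow(["state", "reward"])
            writer.writerows(self.rows)
        self.exported_files.append(path)


with tempfile.TemporaryDirectory() as directory:
    buffer = ExportingBuffer(memory_size=3, export=True, export_directory=directory)
    for step in range(7):
        buffer.add_sample(state=step, reward=step * 0.5)
    with open(buffer.exported_files[0], newline="") as f:
        first_export = list(csv.reader(f))

print(len(buffer.exported_files))
```

With a memory size of 3 and 7 samples, the buffer fills twice, so two files are written and the seventh sample starts a new cycle.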
Importing memories¶

TODO (coming soon)